In the 70s, amid plenty of social unrest, a theory of behavior surfacing from psychology stirred up a good deal of paranoia. Never mind that its foundations were decades old: Edward Thorndike had described his law of effect around the turn of the century, John B. Watson had launched behaviorism in 1913, and B.F. Skinner had modified and expanded on their ideas in his experiments over the following decades.

Things can move slowly in academia. And ideas usually seep out of academia as slowly as molasses on a cold day, with plenty of opportunity along the way to be misconstrued and mischaracterized. B.F. Skinner’s theory of operant conditioning was really very simple: behavior can be changed by the result it produces, whether that result is a reward or a punishment.

Well, duh. We use operant conditioning every time we put a child in time out. Teachers use operant conditioning when they reward a child with a gold star because he didn’t interrupt story time for a whole week. It’s operant conditioning that convinces us to get up and go to work every day.

Guess who else we train using operant conditioning? That’s right! Our pets. Being put outside every time he piddles on the floor teaches a puppy to go outside in the first place. The positive reinforcement of dog treats is what helps us teach our canine companions to follow our commands. And the love and attention we show our pups for a job well done conveys our expectations of good behavior.

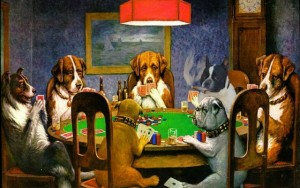

But what has all this operant conditioning done to our pets? Has it turned them into gambling addicts?

Is Your Dog a Gambling Addict?

When Skinner first introduced his theory, you would have thought the sky was falling. He was vilified not only for the theory itself (a scary one for many people, who seemed to think they would be turned into automatons with no free will) but also for advocating that human behavior be reinforced in ways that promote harmony and justice. Also, he raised one of his daughters in a box. Literally: an enclosed, climate-controlled crib he designed himself. (By the time mine were teenagers, I was a fan.)

Skinner didn’t invent operant conditioning. It's been at work since we came out of the caves, if not before. “Grok, if you don’t give me that piece of sloth meat right now, I’m going to bop you over the head with this here club. And that goes for the next time and the next time and all the next times.”

If Grok doesn’t give Thug his piece of sloth, the result is that he’s bopped on the head and left with a headache to remember the lesson by. But if he gives Thug his dinner, he’s rewarded with no lump on his head and no headache. He gives Thug his dinner. That’s operant conditioning.

Skinner’s theory caused public paranoia like nothing I’ve seen before or since. People denounced him as a fascist. Others accused him of communist tendencies, convinced that he was recommending people be conditioned to blindly follow the government, which had, of course, done the conditioning in the first place.

Even Dr. Spock, the pediatrician who wrote Baby and Child Care, which became the bible for mothers of children my age, chimed in.

“I'm embarrassed to say I haven't read any of his work, but I know that it's fascist and manipulative, and therefore I can't approve of it.”

Maybe it was the baby-in-a-box thing.

Regardless, it’s important to remember that this was not exactly a rational time. Hippies were advocating drug use and free love. These were the same people who, as children, had grown up in an atmosphere of constant fear of atomic war with the Russians and had been taught that if they knelt facing a wall, put their heads down, and interlaced their fingers behind their necks, they would be protected from the bombs that would surely drop any day now. It was around the time of the hippies that we learned the government had been lying to us.


Skinner’s operant conditioning theory was upsetting everyone, except maybe for those of us who saw the face-the-wall-kneel-down-cover-your-neck instruction for what it was. (Besides, many of us thought that most of the rest of our society were automatons anyway.)

The theory in more detail

The theory of operant conditioning is a little more complicated than the idea that behavior can be changed through the presence or absence of reward and punishment. Other factors enter into it.

Although the theory has more details than I’m going to give here, it basically says that how a behavior is learned depends on two things. The first is the type of result the behavior causes: whether the result is positive or negative, and how strong it is. The second is the schedule on which the result occurs. That schedule can be fixed, meaning the result arrives on a predetermined, regular schedule, or unscheduled (Skinner called it variable), meaning you never know when the result will come.


Gambling and operant conditioning

Okay, you say, but how does this apply to gambling?

The way a behavior is taught (or extinguished, which means getting rid of an undesirable behavior) determines how quickly it’s learned and how quickly it’s forgotten. Teaching a new behavior usually starts with continuous results: the behavior is rewarded every single time. Once the behavior is learned, either a fixed or an unscheduled rate of results is used to cement it.

Which method of teaching produces behavior that lasts the longest and is the hardest to forget? Unscheduled. Why? Because if you never knew when the result would come during training, you keep performing the behavior over and over in hopes that this will be the time you get the result you want.

Why do people gamble (the behavior)? To get money (the result). How often do they get the money? They never know (unscheduled result). Why do they keep gambling? In hopes of getting a positive result. The next hand might be the one.

How does a dog become a gambling addict?

Okay, you say. I see how operant conditioning can make a person addicted to gambling, but my dog can’t hold a hand of poker or pull the lever on a slot machine.

Operant conditioning applies to all kinds of behavior: human, animal, even insect (in the wild, it’s usually Mother Nature who provides the result). Every time you’ve taught your dog to perform a trick or taught him not to do something, you’ve used operant conditioning.

Even if the result he earns is only praise and a pat, his behavior has produced a positive result, and he’s more likely to perform that behavior again. If you’ve scolded him for pottying in the house (not a very effective method of housebreaking, I know, but I needed an example), you’ve produced a negative result, and he’ll be a little less likely to potty indoors again.

But what if you didn’t praise him every time he performed the desired behavior but only every few times he performed it? Would he stop?

No. The theory of operant conditioning says he will continue to perform that behavior over and over again, especially if he previously received the result on a random schedule. After all, he never knows when he’ll get the treat, the pat on the head, or the loving words. He’ll keep trying and trying. Maybe this will be the time!


How does that make him a gambling addict?

I'm going to use a personal story to answer this.

As some of you know, I have seven dogs — six papillons and a rescue. My husband has a shop, a freestanding building, in our backyard. Every time I let the dogs out, they go running to his shop to beg for a dog treat.

This really irritates him. As he says, he’ll be involved in the most intricate, delicate part of a project (and from the amount of complaining he does, there must be a whole lot of intricate, delicate parts in every one of his projects), and seven dogs start swarming around him, barking, weaving in and out between his feet, and balancing on their back legs to get a head up (no pun intended) on the others.

So I came up with a plan.

Before I let the dogs out, I said, I would call him and let him know. He could then close the door to his shop so they couldn’t get in to annoy him. Once they were convinced they weren’t going to get a treat by running out to his shop, or even get into the shop to beg, they’d soon stop bugging him. But I told him he had to do it every time; otherwise, it wouldn’t work.

Simple, right? He shut his door and didn’t answer their barks and cries on the first two or three occasions. But before they even had a chance to suspect they might not get the result they wanted by running out to his shop, he opened the door, invited them in, and gave each of them a treat. From then on, sometimes he would ignore them, but every once in a while he’d invite them in and give each of them a treat. “I’ll start next time,” he reassured me.


So now we have seven dogs who cannot be convinced that running to his shop, barking, and scratching on his door will not get them the result they want. He rewarded them at random for their behavior, and his unscheduled reinforcement of that behavior cemented it into their brains.

Now they gamble that this will be the time he lets them in and gives them a treat. It’s a learned behavior that will take moving heaven and earth to “unlearn” (and I'm sure he’ll undo any progress I’ve made every time he gets the chance). He still wants me to call him when I let the dogs out, but why should I? The dogs have him trained, and they didn’t even try.

The moral of this story is that when you’re training your dog, be consistent. You don’t have to be regular, but you do have to be consistent. Provide a particular result only for the behavior you want to teach or extinguish, and never provide that same result for any other behavior.

When trying to teach a behavior or extinguish one, don’t confuse your dog (or your husband) by offering a result for one behavior and then offering the same result for the opposite behavior. And one more piece of advice…don’t let your husband teach your dog to gamble.
